SOG Command Processor¶

The SOG command processor, SOG, is a command line tool for running the SOG code and for related operations such as reading values from infiles.

Installation¶

See Installation.

Available Commands¶

The command SOG or SOG --help produces a list of the available SOG options and sub-commands:

$ SOG --help
usage: SOG [-h] [--version] {run,read_infile} ...

optional arguments:
  -h, --help         show this help message and exit
  --version          show program's version number and exit

sub-commands:
  {run,read_infile}
    run              Run SOG with a specified infile.
    read_infile      Print infile value for specified key.

Use `SOG <sub-command> --help` to get detailed help about a sub-command.

For details of the arguments and options for a command use SOG <command> --help. For example:

$ SOG run --help
usage: SOG run [-h] [--dry-run] [-e EDITFILE] [--legacy-infile] [--nice NICE]
               [-o OUTFILE] [--version] [--watch]
               EXEC INFILE

Run SOG with INFILE. Stdout from the run is stored in OUTFILE which defaults
to INFILE.out. The run is executed WITHOUT showing output.

positional arguments:
  EXEC                  SOG executable to run. May include relative or
                        absolute path elements. Defaults to ./SOG.
  INFILE                infile for run

optional arguments:
  -h, --help            show this help message and exit
  --dry-run             Don't do anything, just report what would be done.
  -e EDITFILE, --editfile EDITFILE
                        YAML infile snippet to be merged into INFILE to change
                        1 or more values. This option may be repeated, if so,
                        the edits are applied in the order in which they
                        appear on the command line.
  --legacy-infile       INFILE is a legacy, Fortran-style infile.
  --nice NICE           Priority to use for run. Defaults to 19.
  -o OUTFILE, --outfile OUTFILE
                        File to receive stdout from run. Defaults to
                        ./INFILE.out; i.e. INFILE.out in the directory that
                        the run is started in.
  --version             show program's version number and exit
  --watch               Show OUTFILE contents on screen while SOG run is in
                        progress.

You can check what version of SOG you have installed with:

$ SOG --version

SOG run Command¶

The SOG run command runs the SOG code executable with a specified infile. If you have compiled and linked SOG in SOG-code, and you want to run a test case using your test infile SOG-test/infile.short, use:

$ cd SOG-test

$ SOG run ../SOG-code/SOG infile.short

That will run SOG using infile.short as the infile. The screen output (stdout) will be stored in infile.short.out. It will not be displayed while the run is in progress. The command prompt will not come back until the run is finished; i.e. the SOG run command will wait until the end of the run before letting you do anything else in that shell.

SOG run has some options that let you change how it acts:


The --dry-run option tells you what the SOG command you have given would do, but doesn’t actually do anything. That is useful for debugging when things don’t turn out like you expected, or for checking beforehand.

The --editfile option allows 1 or more YAML infile snippets to be merged into the YAML infile for the run. See YAML Infile Editing for details.

The --legacy-infile option skips the YAML processing of the infile, allowing the run to be done with a legacy, Fortran-style infile.

The --nice option allows you to set the priority that the operating system will assign to your SOG run. It defaults to 19, the lowest priority, because SOG is CPU-intensive. Setting it to run at low priority preserves the responsiveness of your workstation, and allows the operating system to share resources efficiently between one or more SOG runs and other processes.

The -o or --outfile option allows you to specify the name of the file to receive the screen output (stdout) from the run.

The --version option just returns the version of SOG that is installed.

The --watch option causes the contents of the output file that is receiving stdout to be displayed while the run is in progress.
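The way the defaults described above fit together can be pictured with a small Python sketch. This is not the SOG command processor's own code: the function name plan_run and the dictionary it returns are hypothetical, and the use of the infile's base name for the default outfile is an assumed reading of "INFILE.out in the directory that the run is started in". The sketch only describes a run, in the spirit of --dry-run, and never starts one.

```python
import os


def plan_run(executable, infile, outfile=None, nice=19):
    """Describe a run without starting it (hypothetical helper)."""
    # Unix nice values range from -20 (highest priority) to 19 (lowest).
    if not -20 <= nice <= 19:
        raise ValueError("nice must be between -20 and 19")
    if outfile is None:
        # Assumed reading of the default: INFILE.out in the current directory.
        outfile = "./" + os.path.basename(infile) + ".out"
    return {
        "executable": executable,
        "infile": infile,
        "outfile": outfile,
        "nice": nice,
    }


plan = plan_run("../SOG-code/SOG", "infile.short")
print(plan["outfile"], plan["nice"])
```

Passing outfile or nice explicitly plays the role of the -o and --nice options; leaving them out gives the documented defaults.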

YAML Infile Editing¶

The --editfile option (-e for short) of the SOG run command allows 1 or more YAML infile snippets to be merged into the YAML infile for the run. For example,

$ cd SOG/SOG-test-RI

$ SOG run ../SOG-code-code/SOG ../SOG-code-ocean/infile.yaml -e ../SOG-code-ocean/infileRI.yaml

would run the repository version of the Rivers Inlet model by applying the SOG-code-ocean/infileRI.yaml edits to the base SOG-code-ocean/infile.yaml infile.

The --editfile option may be used multiple times; if so, the edits are applied to the infile in the order that they appear on the command line. The commands

$ cd SOG/SOG-test-RI

$ SOG run ../SOG-code-code/SOG ../SOG-code-ocean/infile.yaml -e ../SOG-code-ocean/infileRI.yaml -e tweaks.yaml

would run the repository version of the Rivers Inlet model with the extra value changes defined in SOG-test-RI/tweaks.yaml.

The intent of the YAML infile editing feature is that runs can generally use the repository infile.yaml as their base infile and adjust the parameter values for the case of interest by supplying 1 or more YAML edit files.

YAML edit files need only contain the “key paths” and values for the parameters that are to be changed. Example:

# edits to create a SOG code infile for a 300 hr run starting at
# cruise 04-14 station S3 CTD cast (2004-10-19 12:22 LST).
#
# This file is primarily used for quick tests during code
# development and refactoring.

end_datetime:
  value: 2004-11-01 00:22:00

timeseries_results:
  std_physics:
    value: timeseries/std_phys_SOG-short.out
  user_physics:
    value: timeseries/user_phys_SOG-short.out
  std_biology:
    value: timeseries/std_bio_SOG-short.out
  user_biology:
    value: timeseries/user_bio_SOG-short.out
  std_chemistry:
    value: timeseries/std_chem_SOG-short.out

profiles_results:
  profile_file_base:
    value: profiles/SOG-short
  halocline_file:
    value: profiles/halo-SOG-short.out
  hoffmueller_file:
    value: profiles/hoff-SOG-short.dat
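One way to picture the merging of edit files into a base infile is a recursive update of nested mappings, applied once per edit file in command line order. The Python sketch below works on plain dictionaries standing in for parsed YAML; it is an illustration under that assumption, not the SOG implementation. The function names merge_edits and apply_edit_files, the description key, and the sample values are all hypothetical.

```python
import copy


def merge_edits(base, edits):
    """Return a copy of `base` with the values at the key paths in `edits` replaced."""
    merged = copy.deepcopy(base)
    for key, value in edits.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            # Descend so that sibling keys not named in the edit are kept.
            merged[key] = merge_edits(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


def apply_edit_files(base, edit_list):
    """Apply each edit mapping in order; later edits win on conflicts."""
    result = base
    for edits in edit_list:
        result = merge_edits(result, edits)
    return result


# Hypothetical stand-ins for a parsed base infile and two edit files.
base = {
    "end_datetime": {"value": "2005-01-01 00:00:00", "description": "end of run"},
    "timeseries_results": {"std_physics": {"value": "timeseries/std_phys_SOG.out"}},
}
first = {"end_datetime": {"value": "2004-11-01 00:22:00"}}
second = {"timeseries_results": {"std_physics": {"value": "timeseries/std_phys_SOG-short.out"}}}

result = apply_edit_files(base, [first, second])
print(result["end_datetime"])
```

Because only the named key paths are replaced, an edit file can stay as short as the example above and still leave every other parameter of the base infile untouched.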

SOG batch Command¶

The SOG batch command runs a series of SOG code jobs, possibly using concurrent processes on multi-core machines. The SOG jobs to run are described in a YAML file that is passed on the command line. To run the jobs described in my_SOG_jobs.yaml, use:

$ cd SOG-test

$ SOG batch my_SOG_jobs.yaml

SOG batch has some options that let you change how it acts:

$ SOG batch --help
usage: SOG batch [-h] [--dry-run] [--debug] batchfile

positional arguments:
  batchfile   batch job description file

optional arguments:
  -h, --help  show this help message and exit
  --dry-run   Don't do anything, just report what would be done.
  --debug     Show extra information about the building of the job commands
              and their execution.
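The idea of working through a series of jobs with a cap on how many run at once can be sketched with Python's standard library. This is only an illustration of bounded concurrency, not a description of how SOG batch is implemented. The function name run_batch and its runner parameter are hypothetical, and the parameter max_concurrent_jobs simply mirrors the batch file key described in the next section.

```python
import os
import subprocess
from concurrent.futures import ThreadPoolExecutor


def run_batch(commands, max_concurrent_jobs=1, runner=subprocess.call):
    """Run each command with `runner`, at most `max_concurrent_jobs` at a time.

    Returns the runner results (exit codes for subprocess.call) in job order.
    """
    if max_concurrent_jobs < 1:
        raise ValueError("max_concurrent_jobs must be at least 1")
    with ThreadPoolExecutor(max_workers=max_concurrent_jobs) as pool:
        return list(pool.map(runner, commands))


# A starting point for the cap: the number of (virtual) cores on this machine.
suggested_limit = os.cpu_count() or 1

# Hypothetical job commands; a stand-in runner is used so nothing is executed.
commands = [["SOG", "run", "../SOG-code/SOG", f"job{i}.yaml"] for i in range(3)]
exit_codes = run_batch(commands, max_concurrent_jobs=2, runner=lambda argv: 0)
print(suggested_limit, exit_codes)
```

Threads are sufficient here because each worker would spend its time waiting on an external process; the compute-bound work happens in the processes that the runner starts.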

Batch Job Description File Structure¶

SOG batch job description files are written in YAML. They contain a collection of top level key-value pairs that define default values for all jobs, and nested YAML mapping blocks that describe each job to be run. Example:

max_concurrent_jobs: 4
SOG_executable: /data/dlatornell/SOG-projects/SOG-code/SOG
base_infile: /data/dlatornell/SOG-projects/SOG-code/infile_bloomcast.yaml

jobs:
  - average bloom:

  - early bloom:
      edit_files:
        - bloomcast_early_infile.yaml

  - late bloom:
      edit_files:
        - bloomcast_late_infile.yaml

This file would result in the following:

- Up to 4 jobs would be run concurrently.

- The SOG executable for the jobs would be /data/dlatornell/SOG-projects/SOG-code/SOG

- The base infile for all jobs would be /data/dlatornell/SOG-projects/SOG-code/infile_bloomcast.yaml

- The 1st job would be logged as average bloom. It would have no infile edits applied, and its stdout output would be directed to infile_bloomcast.yaml.out.

- The 2nd job would be logged as early bloom. Infile edits from bloomcast_early_infile.yaml would be applied, and its stdout output would go to bloomcast_early_infile.yaml.out.

- The 3rd would be logged as late bloom with edits from bloomcast_late_infile.yaml and stdout output going to bloomcast_late_infile.yaml.out.

The top level key-value pairs that set defaults for jobs are all optional. The keys that may be used are:

max_concurrent_jobs:

The maximum number of jobs that may be run concurrently. If the max_concurrent_jobs key is omitted its value defaults to 1 and the jobs will be processed sequentially. As a guide, the value of max_concurrent_jobs should be less than or equal to the number of cores on the worker machine (or the number of virtual cores on machines with hyper-threading). See Notes on SOG batch Performance for information on some tests of SOG batch on various worker machines.

SOG_executable:

The SOG code executable to run. The path to the executable may be specified as either a relative or an absolute path. If SOG_executable is excluded from the top level of the file it must be specified for each job. If it is included in both the top level and in a job description, the value in the job description takes precedence.

base_infile:

The base YAML infile to use for the jobs. The path to the base infile may be specified as either a relative or an absolute path. If base_infile is excluded from the top level of the file it must be specified for each job. If it is included in both the top level and in a job description, the value in the job description takes precedence.

edit_files:

The beginning of a list of YAML infile edit files to use for the jobs. The paths to the edit files may be specified as either relative or absolute paths. Exclusion of edit_files from the top level of the file means that it is an empty list. If it is included in both the top level and in a job description, the list elements in the job description are appended, in order, to the list specified at the top level.

legacy_infile:

When True the job infiles are handled as legacy Fortran-ish infiles. When False they are handled as YAML infiles. If legacy_infile is excluded from the top level of the file its value defaults to False. If the value is True in the top level, the default base_infile and edit_files keys have no meaning and therefore must be excluded; furthermore, a base_infile key must be included for each job. If the value is True in an individual job description, the edit_files key for that job is meaningless and must be excluded; furthermore, a base_infile key must be included for that job. This is a seldom used option that is included for backward compatibility.

nice:

The nice level to run the jobs at. If the nice key is omitted its value defaults to 19. If it is included in both the top level and in a job description, the value in the job description takes precedence. This is a seldom used option since jobs should generally be run at nice 19, the lowest priority, to minimize contention with interactive use of workstations.

The other part of the YAML batch job description file is a block mapping with the key jobs. It is a required block. It contains a list of mapping blocks that describe each of the jobs to be run. The key of each block in the jobs list is used in the SOG batch command logging output, so it is good practice to make it descriptive.

The contents of each job-name block specify the values of the parameters to be used for the run. The values for parameters not specified in the job-name block are taken from the top level key-value pairs described above. If a parameter is specified in both the job-name block and the top level of the file, the value from the job-name block takes precedence. The exception to that is the edit_files parameter. The list of YAML infile edit files in a job-name block is appended to the list specified at the top level. The max_concurrent_jobs key cannot be used in the jobs section. Each job-name block can contain 1 other key-value pair in addition to those described above:

outfile:

The name of the file in which the stdout output of the job is to be stored. The path to the outfile may be specified as either a relative or an absolute path. Exclusion of the outfile key-value pair results in the stdout output of the job being stored in a file whose name is the last file in the edit_files list for the job with .out appended. If there are no YAML infile edit files, the output will be stored in a file whose name is the base_infile with .out appended.
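The precedence rules above can be summarized in a short Python sketch that combines the top level defaults with one job-name block. It is a reading of the rules as documented here, not the SOG batch source. The function name resolve_job is hypothetical, the legacy_infile option is left out for brevity, and stripping the directory from the default outfile name is an assumption based on the infile_bloomcast.yaml.out example.

```python
import os


def resolve_job(defaults, job_name, job):
    """Combine top level defaults with one job-name block (hypothetical helper)."""
    job = job or {}  # an empty block such as "- average bloom:" parses to None
    settings = {"name": job_name}
    # Job values take precedence over top level values for these keys.
    for key in ("SOG_executable", "base_infile"):
        settings[key] = job.get(key, defaults.get(key))
        if settings[key] is None:
            raise ValueError(f"{key} must be given at the top level or in {job_name!r}")
    settings["nice"] = job.get("nice", defaults.get("nice", 19))
    # edit_files is the exception: the job list is appended to the top level list.
    settings["edit_files"] = list(defaults.get("edit_files", [])) + list(job.get("edit_files", []))
    # Default outfile: last edit file, else the base infile, with .out appended.
    # Using only the file name, without its directory, is an assumption.
    source = settings["edit_files"][-1] if settings["edit_files"] else settings["base_infile"]
    settings["outfile"] = job.get("outfile", os.path.basename(source) + ".out")
    return settings


defaults = {
    "SOG_executable": "/data/dlatornell/SOG-projects/SOG-code/SOG",
    "base_infile": "/data/dlatornell/SOG-projects/SOG-code/infile_bloomcast.yaml",
}
jobs = [
    {"average bloom": None},
    {"early bloom": {"edit_files": ["bloomcast_early_infile.yaml"]}},
]
for entry in jobs:
    for name, block in entry.items():
        print(name, "->", resolve_job(defaults, name, block)["outfile"])
```

For the example batch file shown earlier, this reproduces the outfile names listed for the average bloom and early bloom jobs.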

Notes on SOG batch Performance¶

When the SOG batch command was implemented in late August of 2013 a series of tests was conducted on 3 worker machines to explore the effect on run time of running various numbers of jobs concurrently.

The machines tested were cod, salish, and tyee. The changeset hash of the SOG-code repository that was used for the tests was a0ea801cf23b. The tests consisted of running various numbers of the same job concurrently and measuring their wall-clock and system running times with the time command. There were no other users on the machines when the tests were run, and the tests on cod and tyee were not run at the same time to avoid contention for access to storage on ocean. The test job was to run with SOG-code/infile.yaml and an edit file that caused the timeseries and profiles output files to be written to different files for each job. The batch job description files were constructed so that the stdout output from each job also went to a separate file. Note, however, that the S_riv_check, salinity_check, and total_check files were written to by all running jobs at once, in contention with each other, because those file names are hard-coded in SOG-code.

A Python script (build_test_files.py) was used to generate the batch job description files, and the YAML infile edit files for the tests. It and its output can be found in /ocean/dlatorne/SOG-projects/batch_test/ (or /data/dlatorne/SOG-projects/batch_test on salish). The results of the tests and the code to produce the graph images below are in results.py in the same directory.

The results discussed below are just snapshots to provide initial guidance on how many jobs to try to run concurrently. The tests were conducted under fairly ideal conditions with no other users on the machines and low network load (a Friday afternoon and Saturday morning in late August :-). If you are planning to run a large number of jobs it may be worthwhile to conduct some experiments to determine the optimal level of concurrency for the machine(s) you plan to use, and the usage conditions they are operating under.

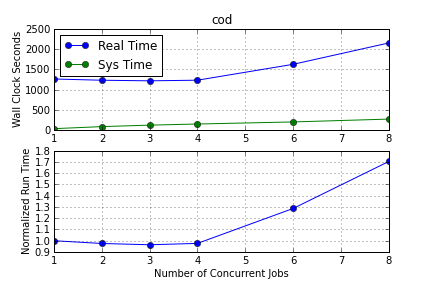

cod Test Results¶

cod is a 2.8 GHz 4-core machine that does not use hyper-threading, so it presents as having 4 CPUs. It has 8 GB of RAM, and its local drive is a 7200 rpm SATA drive. Output storage for the tests was over the network to ocean via an NFS mount. When the tests were run cod was running Ubuntu 12.04.2 LTS as its operating system.

The graphs below show the wall-clock and system run times for various numbers of concurrent jobs from 1 to 8 run via SOG batch on cod, and the runtime normalized relative to the time for a single job:

As expected, up to 4 jobs running concurrently take the same time as a single job because SOG is, for the most part, a compute-bound model, and there is very little penalty for doing 1 run per core. What’s interesting is that “overloading” the processor with 6 or 8 concurrent jobs does not result in the normalized run times of 1.5 or 2 that might be expected. Presumably this is due to the operating system being able to swap among jobs quickly enough when there are I/O waits that 8 jobs can complete in less time than 2 x 4 jobs.

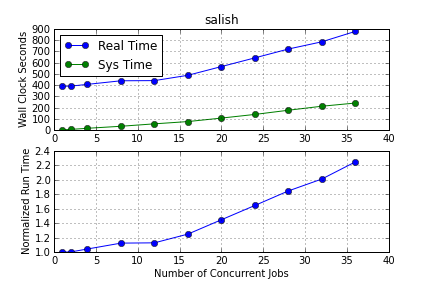

salish Test Results¶

salish is a 3.8 GHz 2 x 8-core machine with hyper-threading, so it presents as having 32 CPUs. It has 128 GB of RAM, and it has local 10000 rpm SATA and SSD drives. Output storage for the tests was to the local SATA drive. When the tests were run salish was running Ubuntu 13.04 as its operating system.

The graphs below show the wall-clock and system run times for various numbers of concurrent jobs from 1 to 36 run via SOG batch on salish, and the runtime normalized relative to the time for a single job:

salish does not exhibit the same flat relationship as cod and tyee between run time and job count up to the number of physical cores. 8 and 12 concurrent jobs take about 12% longer per job than a single job, and 16 jobs take almost 25% longer per job. The reason for this is unknown; however, the effect tails off somewhat as the number of concurrent jobs increases toward the number of virtual cores, with 32 jobs taking almost exactly twice as long to run as a single job. That means that it is more efficient to run 32 jobs concurrently than to do 2 runs of 16 concurrent jobs. “Overloading” the processor by running 36 concurrent jobs increases the run time proportionally to 225%.

Even though the normalized performance of salish may not look as attractive as that of tyee, it must be noted that in absolute terms it is, by far, the fastest at running SOG.
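The efficiency comparison for salish can be checked with a little arithmetic. The Python sketch below uses approximate normalized run times read from the text above (for example 1.25 for "almost 25% longer" and 2.0 for "almost exactly twice"), so the numbers are rounded, not measured values. The function name total_time and the assumption that successive batches run back to back are both hypothetical.

```python
import math

# Approximate normalized wall-clock time for N concurrent jobs on salish,
# relative to a single job (rounded from the discussion in the text).
normalized_time = {1: 1.0, 8: 1.12, 12: 1.12, 16: 1.25, 32: 2.0, 36: 2.25}


def total_time(total_jobs, concurrency):
    """Time to finish `total_jobs`, run in back-to-back batches of `concurrency`."""
    batches = math.ceil(total_jobs / concurrency)
    return batches * normalized_time[concurrency]


print(total_time(32, 32))  # one batch of 32 concurrent jobs
print(total_time(32, 16))  # two batches of 16 concurrent jobs
print(total_time(32, 1))   # 32 jobs run one after another
```

With these rounded figures, one batch of 32 costs 2.0 single-job times while two batches of 16 cost 2.5, which is the comparison made above. The cost per job at 36 concurrent jobs (2.25 / 36) equals that at 32 (2.0 / 32), which is what a proportional increase means.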

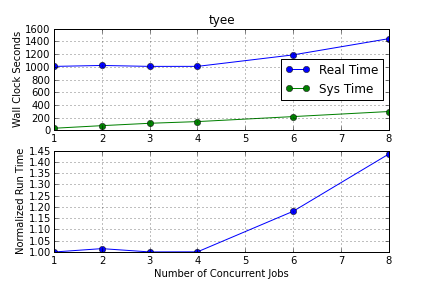

tyee Test Results¶

tyee is a 3.8 GHz 4-core machine with hyper-threading, so it presents as having 8 CPUs. It has 16 GB of RAM, and its local drive is an SSD drive. Output storage for the tests was over the network to ocean via an NFS mount. When the tests were run tyee was running Ubuntu 12.04.2 LTS as its operating system.

The graphs below show the wall-clock and system run times for various numbers of concurrent jobs from 1 to 8 run via SOG batch on tyee, and the runtime normalized relative to the time for a single job:

Like cod, tyee takes the same amount of time to run 4 concurrent jobs as to run a single job. However, hyper-threading allows 6 or 8 concurrent jobs to be run with less slow-down than cod exhibits, and 8 jobs is significantly faster than 2 x 4 jobs. “Overloading” with more concurrent jobs than virtual cores was not tested on tyee.

SOG read_infile Command¶

The SOG read_infile command prints the value associated with a key in the specified YAML infile. It is primarily for use by the SOG buildbot where it is used to get output file paths/names from the infile. Example:

$ cd SOG-code-ocean

$ SOG read_infile infile.yaml timeseries_results.std_physics

timeseries/std_phys_SOG.out
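The dotted key in the example can be read as a path through the nested mappings of the YAML infile. The Python sketch below shows that kind of lookup on a plain dictionary standing in for a parsed infile. It is an illustration, not the read_infile source: the function name read_infile_value is hypothetical, and returning the value sub-key is an assumption based on the layout of the edit file example shown earlier.

```python
def read_infile_value(infile, key_path):
    """Follow a dotted key path through nested mappings and return its value."""
    node = infile
    for key in key_path.split("."):
        node = node[key]  # raises KeyError if the path does not exist
    # Assumed layout: each parameter stores its setting under a "value" key.
    return node["value"]


# Stand-in for a parsed infile.yaml, trimmed to one parameter.
infile = {
    "timeseries_results": {
        "std_physics": {"value": "timeseries/std_phys_SOG.out"},
    },
}
print(read_infile_value(infile, "timeseries_results.std_physics"))
```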

Source Code and Issue Tracker¶

Code repository: /ocean/sallen/hg_repos/SOG

Source browser: http://bjossa.eos.ubc.ca:9000/SOG/browser/SOG (login required)

Issue tracker: http://bjossa.eos.ubc.ca:9000/SOG/report (login required)